Amsterdam Modeling Suite Software Usage on Penn State’s Roar Computer Clusters

Submission information

Submission Number: 78

Submission ID: 110

Submission UUID: a21e9844-8ffc-4624-b397-865cb8a42151

Submission URI: /form/project

Created: Tue, 11/17/2020 - 14:42

Completed: Tue, 11/17/2020 - 15:24

Changed: Tue, 08/02/2022 - 15:05

Submitted by: Jeffrey J. Nucciarone

Language: English

Is draft: No

Webform: Project

Complete

Project Leader

Project Personnel

Project Information

Penn State recently acquired licenses for the Amsterdam Modeling Suite (AMS) package. AMS is a collection of software tools for performing computational chemistry calculations. The AMS-jobs graphical user interface serves as a job management interface, and AMS-input is used to model the required structures. The suite has native codes for density functional theory (DFT) calculations: ADF for molecular systems and BAND for periodic systems. It can also be used as a portal to create input files, submit jobs, and analyze results for other popular computational chemistry codes. Calculations with semi-empirical codes such as DFTB+ and plane-wave DFT codes such as Quantum ESPRESSO (QE) and the Vienna Ab initio Simulation Package (VASP) can be performed through the AMS suite. Additionally, once a proper secure shell (ssh) portal is set up and the necessary queues are defined in the AMS-jobs interface, remote jobs can be submitted directly from the AMS suite. AMS-jobs then acts as a convenient interface for monitoring the remote jobs: it handles the transfer of input files to the remote server and, when a job completes, automatically retrieves the relevant output files. Results can be analyzed with the visualization tools included in the suite. These features make the AMS suite a promising candidate for simplifying the workflow of computational chemistry research projects.

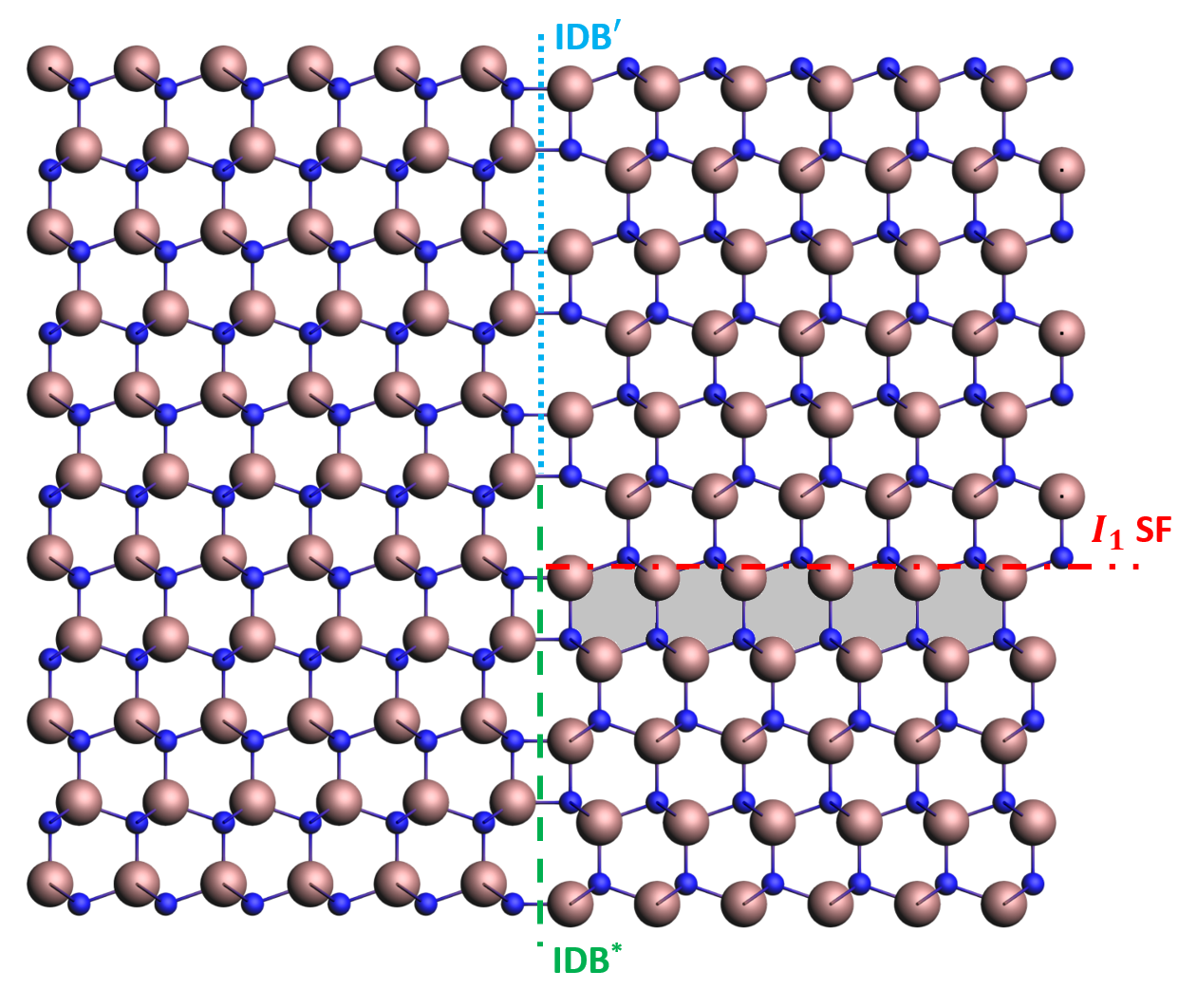

This project aims to document the steps needed to connect the AMS suite with Penn State’s Roar supercomputer. As a proof of concept, calculations and analysis of results will be performed for a current research project on inversion domain boundaries in the wurtzite crystal structure.

Project Information Subsection

Documentation to assist new users of the AMS suite with the Roar supercomputer. These instructions can serve as a base for other institutions to set up AMS in a similar fashion, giving desktop users the ability to interface directly with central HPC resources.

Results for publication on inversion domain boundaries in the wurtzite crystal structure.

{Empty}

Graduate student in physics/materials science

{Empty}

Practical applications

{Empty}

Penn State University - Altoona Campus

3000 Ivyside Park

Altoona, Pennsylvania 16601

CR-Penn State

12/01/2020

No

Already behind3Start date is flexible

6

{Empty}

03/10/2021

{Empty}

01/12/2022

- Milestone Title: Establish a job submission pipeline from the AMS-jobs interface to the Roar supercomputer.

  Milestone Description: Set up the required ssh portal from the AMS suite to the Roar supercomputer. Define the relevant queues in the AMS-jobs GUI. Submit a test job to the Roar supercomputer. Document all instructions for future users.

  Completion Date Goal: 2021-01-15

- Milestone Title: Set up calculations for the research project on inversion domain boundaries in wurtzite crystals.

  Milestone Description: Create input supercell structures containing inversion domain boundary models using the AMS-input GUI. Submit Quantum ESPRESSO jobs to a remote server to compute the formation energies of the defined supercells.

  Completion Date Goal: 2021-03-31

- Milestone Title: Analysis of Results

  Milestone Description: Provide details on the process of analyzing results to compute the formation energies of the inversion domain boundary models. Provide details on using the AMS suite to produce visualizations of results that lead to useful scientific observations. Finalize all documentation.

  Completion Date Goal: 2021-05-07
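The formation-energy analysis named in the milestones can be illustrated with a minimal sketch. It assumes a convention commonly used for planar defects, which this project does not itself specify: the energy of a stoichiometric supercell is compared with the same number of atoms of pristine bulk and divided by the total boundary area, with two boundaries per supercell because of periodic boundary conditions. The function name, parameter names, and numbers below are hypothetical and are not taken from the project's calculations.

```python
def idb_formation_energy(e_supercell, e_bulk_per_atom, n_atoms, area, n_boundaries=2):
    """Formation energy per unit area of an inversion domain boundary.

    Assumed convention (not from the project itself):
        gamma = (E_supercell - N * E_bulk_per_atom) / (n_boundaries * A)

    e_supercell      total energy of the supercell containing the boundaries (eV)
    e_bulk_per_atom  total energy per atom of the pristine bulk crystal (eV)
    n_atoms          number of atoms in the supercell (assumed stoichiometric)
    area             cross-sectional area of one boundary (square angstroms)
    n_boundaries     boundaries per supercell; 2 under periodic boundary conditions
    """
    if area <= 0:
        raise ValueError("area must be positive")
    if n_boundaries <= 0:
        raise ValueError("n_boundaries must be positive")
    excess_energy = e_supercell - n_atoms * e_bulk_per_atom
    return excess_energy / (n_boundaries * area)


# Hypothetical numbers, chosen only to show the call.
gamma = idb_formation_energy(
    e_supercell=-99.0, e_bulk_per_atom=-10.0, n_atoms=10, area=5.0
)
print(f"formation energy: {gamma:.3f} eV per square angstrom")
```

Quantum ESPRESSO reports total energies in Rydberg, so values would need converting to eV before being passed to a function like this one.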

{Empty}

{Empty}

Inversion Domain Boundaries in Wurtzite Crystal Structure.

Submitting and managing jobs on the remote server with external tools.

A scientific approach to analyzing complex data.

{Empty}

Potential democratization of HPC resources by showing how a desktop application can be seamlessly integrated into a central HPC resource.

Access to Penn State ICDS ROAR HPC system

{Empty}

Final Report

The project developed a method for extending computing from a laptop to a remote back-end HPC cluster without the end user needing to go through multiple login steps or manual file transfers.

The integration of other scientific computing applications that have a native front end to integrate with back-end HPC systems without relying on other portals.

Other projects that use a similar approach to extending local to HPC resources have the potential to reduce the requirements for large front-end interactive systems needed to support classroom or other mass-login uses. The use of the vendors' own front end requires the queuing system for the HPC resources to be set up to support these multiple remote requests.

There is a lower entry barrier to getting started as incoming students or other researchers do not have to be excessively fluent in how the back-end resources operate.

Implementation and system engineers will have to understand how these types of applications integrate with HPC systems. Some of these applications are not yet as mature as we would like, so in their current form they require a lot of technical assistance. This project helped expose some of these weaknesses.

See above.

By simplifying and unifying integration from local to remote HPC resources we can make it easier to share results across multiple disciplines and allow greater sharing and collaboration.

This project helped lay the groundwork for the further democratization of high-performance computing resources, bringing them out of their more traditional "cloistered" environment of login nodes and captive portals and directly into locally run and managed end-user applications.

The original goals of this project were more ambitious than we realized. The vendor's software suite did not provide a robust enough interface to the PSU HPC cluster's queuing and job management system, because the site used a non-standard way of interfacing the management and queuing systems. The "extra" messages generated by the queue system were enough to confuse the vendor's API. Given more time we could have solved the issue, but this work was moving beyond the original scope of the project. The project did give us a good feasibility study from which we can continue to pursue the original approach.

Despite some of the technical limitations the project encountered, we demonstrated that a local application run on a laptop could be extended to use available HPC resources for problems more complex than a local system can handle. Researchers can set up and test their models locally, run them seamlessly on the back-end HPC system, and have the results returned automatically to the local system for further analysis.